LiDAR Technology in Apple Vision Pro

Unlocking Spatial Computing

Discover how the Apple Vision Pro uses LiDAR technology, including its design, its components, and how it was adapted from the iPad Pro 11. Explore the key features that enable spatial computing in this headset.

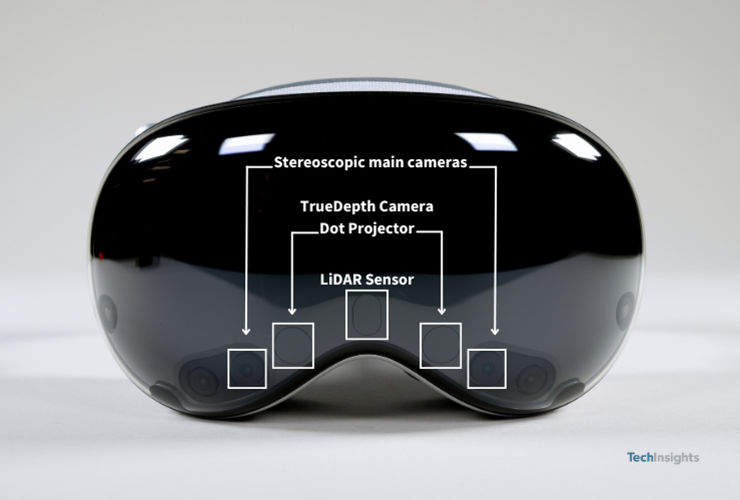

Spatial computing is a highlight of the new Apple Vision Pro headset. The device uses a range of sensors to blend virtual and physical environments, sensing the three-dimensional structure of the user's surroundings and tracking the user's eye movements.

The Vision Pro measures the 3D structure of its surroundings using a LiDAR (Light Detection and Ranging) scanner, a TrueDepth sensor, and two main cameras for stereoscopic depth analysis.
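Stereoscopic depth analysis relies on triangulation: a point seen by two horizontally offset cameras appears shifted between the two images (the disparity), and its depth is inversely proportional to that shift, Z = f · B / d. The sketch below assumes an idealized, rectified pinhole camera pair; the helper name and the focal length, baseline, and disparity values are hypothetical and are not Vision Pro specifications.

```python
def depth_from_disparity(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth Z = f * B / d for a rectified stereo pair (hypothetical helper).

    focal_length_px: focal length expressed in pixels
    baseline_m: distance between the two camera centres, in metres
    disparity_px: horizontal shift of the same point between the two images
    """
    if disparity_px <= 0:
        # Zero disparity means a point at infinity; negative disparity is invalid.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical example values, not measured Vision Pro parameters.
print(depth_from_disparity(1000.0, 0.064, 32.0))  # about 2.0 m
```

Because depth varies as 1/d, a one-pixel disparity error matters far more for distant objects than for nearby ones: with these values, dropping from 32 to 31 pixels moves the estimate by only about 6 cm, while the same one-pixel error at 4 pixels of disparity moves it by several metres.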

The LiDAR module, positioned above the nose bridge, uses direct time-of-flight (dToF) technology: it emits laser pulses, and a Single Photon Avalanche Diode (SPAD) sensor detects the reflected light. Because the light travels to the object and back, the distance to the object equals the speed of light multiplied by half the round-trip time.
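The direct time-of-flight relation is d = c · Δt / 2, where c is the speed of light and Δt the measured round-trip time. SPAD-based dToF sensors commonly accumulate many photon arrival times into a histogram and treat the most populated time bin as the echo, which helps reject scattered ambient photons. The Python sketch below illustrates both steps; the helper names, bin width, and sample timestamps are assumptions for illustration, not details of the Vision Pro's actual sensor pipeline.

```python
# Exact SI value of the speed of light in vacuum, metres per second.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_s: float) -> float:
    """Direct ToF: the light goes out and back, so halve the total path."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def estimate_round_trip(timestamps_s, bin_width_s, num_bins):
    """Histogram photon arrival times and return the centre of the fullest bin.

    Hypothetical helper: in real SPAD sensors this is typically done in hardware.
    """
    counts = [0] * num_bins
    for t in timestamps_s:
        idx = int(t // bin_width_s)
        if 0 <= idx < num_bins:  # drop photons outside the timing window
            counts[idx] += 1
    peak_bin = max(range(num_bins), key=lambda i: counts[i])
    return (peak_bin + 0.5) * bin_width_s


# Hypothetical arrival times (seconds): four ambient "noise" photons plus
# a cluster of returns from a surface roughly 1 m away.
photons = [1.0e-9, 3.2e-9, 6.6e-9, 6.7e-9, 6.8e-9, 6.9e-9, 12.7e-9, 17.1e-9]
round_trip = estimate_round_trip(photons, bin_width_s=0.5e-9, num_bins=40)
print(distance_from_round_trip(round_trip))  # about 1.01 m
```

With 0.5 ns bins, each bin spans about 7.5 cm of range (c × 0.5 ns / 2), so finer timing resolution translates directly into finer depth resolution.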

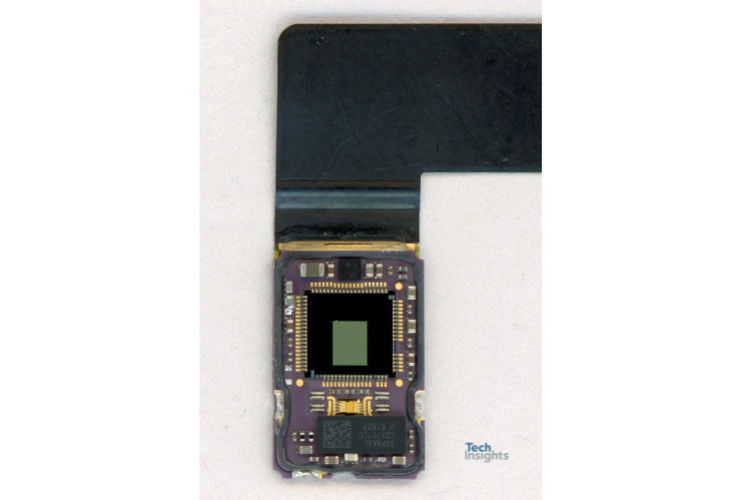

TechInsights analyzed the Vision Pro's LiDAR and found that it reuses the design from the iPad Pro 11. Key components include Texas Instruments' VCSEL (vertical-cavity surface-emitting laser) array driver, Sony's SPAD array, and onsemi's serial EEPROM. The LiDAR operates at a wavelength of 940 nm and flashes twice per second, using lenses and filters to refine the light.

By adapting the established LiDAR module from the iPad Pro 11 for the Vision Pro, Apple likely chose a design that had already been proven in the field before applying it to this more demanding use.
