The Chip Insider®

SPIE ALP. Inventory out of control.

G. Dan Hutcheson

March 24, 2023

“If you don’t like what’s being said, change the conversation”

— Don Draper, Mad Men

SPIE Advanced Lithography and Patterning 2023:

This is the premier conference on advanced lithography. Like many things, it goes through cycles of emergent behavior, transitioning between chaos and order. In the years between immersion and EUV, it was in a chaotic state, as lithographers couldn’t agree on whether scaling was dead or whether EUV would ever transition from a science problem to an engineering one. As the chaos played out and lithographers went in random directions, Moore’s Law actually stalled. Then EUV’s advance brought order, aligning lithographers to a common cause. As it did, presentations at ALP shifted to engineering solutions for making EUV work in manufacturing. This year marked the transition from making EUV work to making it work better and more economically (i.e., more revenue per wafer).

EUV has fundamental problems which limit its use. One is shot noise which creates rough line edges that result in die-value loss. Another is that every photon is precious at these wavelengths. One could say metaphorically that EUV photons are more valuable than gold (though I can’t attest to the accuracy). So much of the effort shown this year is to get more information out of each photon – hence lower its cost. Or make patterns cleaner – hence increase die value.

Reading media commentary since the show, I have to say many investors get this wrong. They believe that if you make EUV more cost effective it lowers demand for tools and hence shrinks the market: a zero-sum view, or one of statics, not dynamics. If this were true, technology would not have evolved over the centuries, because technology makes things cheaper, which should lower demand. By that logic, cheaper high-bandwidth communications should have lowered demand for them. I don’t know about you, but my family spends far more on communications each month than I ever did in the pre-mobile/internet days. Also, if it were true, Moore’s Law wouldn’t work. Lower price-per-component should have put the semiconductor industry out of business in the sixties. Obviously, it didn’t. What they’re missing is dynamics, which can be seen in the economic principle of the Jevons paradox. If you make something cheaper, people will buy more. When analysts talk about lowering demand as a static price point, they’ve forgotten basic economics: demand is not a price:volume point. It is a curve of points that shifts out and in over time. So is supply. The only price:volume point one ever observes is where the two curves cross. Thus, if the supply curve shifts right (hence lower prices for the same volume), more will be sold at the point where the supply curve crosses the demand curve. Sorry for the economics lesson, but if you see someone quoted saying demand will drop because cost drops, just remember they have forgotten undergraduate economics.
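
To make the curve argument concrete, here is a minimal sketch using straight-line supply and demand curves. The coefficients are hypothetical and chosen only for illustration; they are not taken from any EUV market data, and real curves need not be linear.

```python
def equilibrium(a, b, c, d):
    """Return (price, quantity) where linear demand and supply cross.

    Demand: Q = a - b * P   (downward sloping, b > 0)
    Supply: Q = c + d * P   (upward sloping, d > 0)
    """
    price = (a - c) / (b + d)
    quantity = a - b * price
    return price, quantity


# Hypothetical coefficients, for illustration only.
before = equilibrium(a=100.0, b=2.0, c=10.0, d=1.0)

# A productivity gain shifts supply right: more units offered at every
# price (c rises from 10 to 40). The demand curve itself is unchanged.
after = equilibrium(a=100.0, b=2.0, c=40.0, d=1.0)

print("before: price=%.1f quantity=%.1f" % before)
print("after:  price=%.1f quantity=%.1f" % after)
```

With these made-up numbers the crossing point moves from a price of 30 and a volume of 40 to a price of 20 and a volume of 60: the price falls and more units are sold, even though the demand curve never moved.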

Martin van den Brink’s plenary was a superb example of all the things ASML has been doing to make EUV more cost effective. He clearly does not see this as lowering demand. Moreover, as EUV productivity has risen, ASML has received more orders for tools and its customers have committed more exposure layers to the technology. Hence, the Jevons paradox in real time. This is why ASML has been so approving of the introduction of metal-oxide (MOx) dry resists to replace chemically amplified resists (CARs).

MOx also helps sustainability, as Mark Merrill of Lam argued. Its greater sensitivity means less energy is needed to get the same wafers out. When it comes to sustainability, EUV is often seen as the bad princess of the fab. Ken MacWilliams’s talk noted that TSMC’s share of Taiwan’s electricity grid went from 4% to 7% after the introduction of EUV. Anything that makes more use of the energy from EUV photons lowers manufacturing cost while improving the carbon footprint of the tool, which was Mark’s point.

Florian Gstrein of Intel gave another great presentation along these lines. Additionally, his points about Directed Self-Assembly (DSA) rectification of EUV exposures for half-pitch scaling were an eye opener. He showed how DSA rectification could eliminate much of the Line Edge Roughness (LER) problem. DSA has been stuck in the lab for years, hoping to break into the fab. This could be its big break.

Applied Materials’ Sculpta introduction: I can’t believe how many got this one wrong. One analyst saw it as a threat to EUV demand because Applied focused on how it would lower EUV exposure cost by lowering the need for double exposure. Another said it was “just another etch tool.” Another said it was “no big deal.” As to the first, I will refer you to my comments on demand. As to the second, it’s not an etch tool because no resist needs to be put down before exposure. It’s not a stripper either because it does not remove the entire film. It just trims the edges of patterns. The closest thing to it is the laser trimmers used in test to alter analog device electrical properties. I still believe what I said about it being the first new tool/process combination introduced to the fab since CMP. Full disclosure, I could be biased as I’ve known about the technology for four or five years and encouraged its development. As for it being “no big deal,” it’s already in use on advanced nodes by the big three for critical layers. If that is not a big deal, I don’t know what is.

Notable Awards: Great to see Tony Yen receive the Frits Zernike Award for Microlithography and Wilhelm Ulrich the Rudolf and Hilda Kingslake Award in Optical Design. In my opinion, these two were critical to the success of EUV and, by extension, the continuation of Moore’s Law.

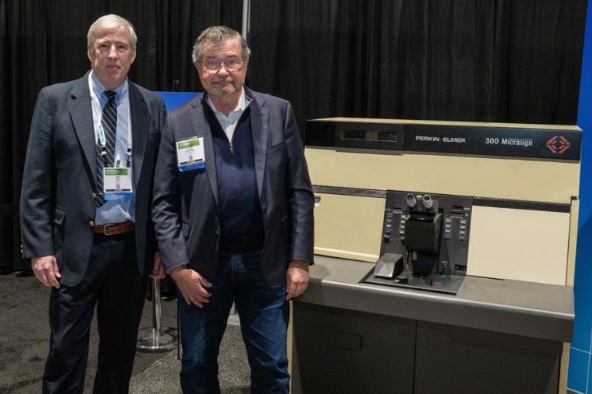

ASML introduces Micralign scanner: Now that I have your attention, a note of historical significance… Sage lithographer Chris Mack happened to remember that 2023 marked the 50th anniversary of the introduction of Perkin Elmer’s Micralign scanning projection aligner, a business that is now part of ASML via M&A. Chris suggested a celebration at ALP was in order. Tony Yen got involved and secured Martin van den Brink’s buy-in, and one thing led to another. Tony scoured the industry and the country and found a system in Vermont. ASML had it cleaned up and shipped to the show floor, after which it went to ASML’s San Jose center for visitors to see.

ASML also had Chip Mason (shown below with me next to the system) present on the importance of the machine and the amazing breakthroughs the engineers needed to make it possible. If you’re interested in the throughline of history and how engineers make a difference, I highly recommend you chase it down (sorry, I failed to find the link on SPIE’s website).

This machine was a milestone in its day and an icon for the innovative spirit of lithographers worldwide. It also echoes what’s happening today in many ways. It came at a time when Moore’s Law was being challenged to move from the era of mils to microns. Today it’s nanometers to angstroms. When the Micralign was introduced, lithography had hit a wall. Next-gen had failed to deliver the economic gains needed. Micralign changed that. Two years later, it would give Gordon Moore the confidence to predict a five-to-ten-year extension of the law named after him in his second seminal paper in 1975. More recently, it’s the breakthroughs surrounding EUV that have extended how far out the industry can continue to deliver density increases.

Happenings, Comments, Questions & Answers:

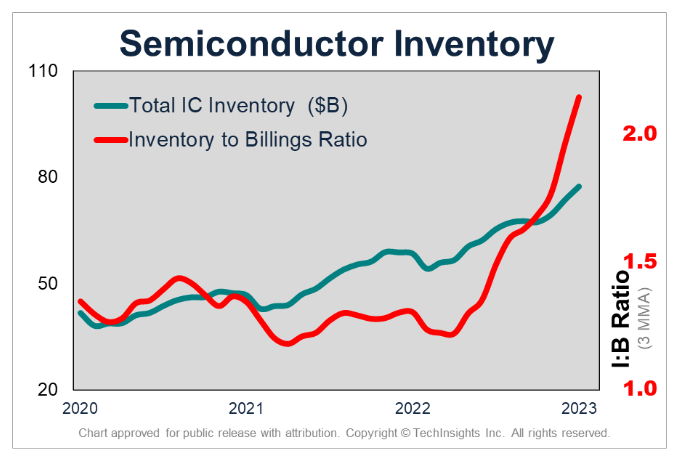

Inventory out of control: Hi Dan, I have some questions about the IC Inventory data. I have used that data for many years, especially since the “Financial Crisis” in 2008. I understood that the inventory to billings ratio was in a “Critical Level” once the ratio reached over 1.5. The current inventory to billings ratio makes the inventory crisis in 2008 look like child’s play. I have a few questions for you.

- Am I understanding the data correctly and is a ratio of more than a value of 1.5 still considered to be a “critical-level”?

- Do you consider the current ratio to be at unprecedented levels?

- I am confused with the ratio being so high and yet the Semiconductor/IC forecast is looking that there will be a recovery by Q4 2023. Can there still be a recovery if the inventory is so elevated?

- Are you assuming massive write-offs or that the Inventory will be burned off by then – When do you see the inventory ratio returning to below 1.5?

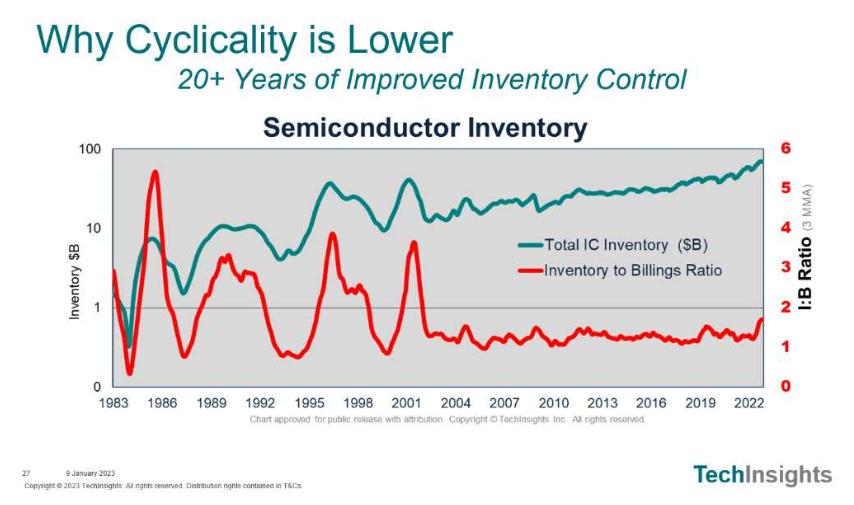

— Outstanding question! You’re right to be concerned. But I should caution that the 1.5 months was more a judgement call on our part back in 2008/9. That year was actually child’s play compared to previous cycles. At the time, we set the 1.5 based on the introduction of computer inventory control systems after the Y2K debacle being a critical infrastructure change. This was observed in much lower inventory volatility between 2002 and 2008 as a chart from my presentation at SEMI ISS shows:

Prior to ~2002, the swings were much wilder. Improved inventory control has been a significant factor in dampening the cycle since Y2K. A combination of computer inventory tracking and rigid Just-In-Time is what drove inventory down to a ratio that walked between 1.1 and 1.5.

What we don’t know today is the degree to which the recent 2.0 number is an anomaly resulting from the covid-shortage 1-2 punch on top of the shift from JIT to Just-In-Case and ASP inflation of inventory. We also do not know the degree to which confirmation bias and being burned by missing the shortage led executives to miss the warning signs that first appeared last June in Semiconductor Analytics. They were too cautious in 2020 and early 2021. This error and all the rhetoric about the never-ending importance of chips had to have emboldened them. Some continued to produce at high utilization levels even into this year. I have not seen such a huge lag between indicators and utilization since the 1990s.

Four of these factors are previously unseen. The fifth hasn’t been with us for more than 20 years, which is outside the experience window of most senior executives. Look at the math: if you’re a CEO aged 60-65 today, the last time an inventory build was ignored you were around 40 and probably in a technical management position. The bottom line is that it will take some time before these perturbations attenuate and we have a solid idea of where exactly the new ratio lies.

It’s also important to realize that I:B ratio peaks are lagging indicators for many reasons. Normally inventory is being built to forecasts of good times. When billings turn down, there are still 3-4 months of production in the pipeline. So inventory continues to rise as billings fall, hence the rise in the ratio. It does not invert until a recovery is almost underway. In the 2008 debacle, for example, the inventory inversion did not start until January of 2009, the same month as the billings bottom.

As a general rule, there must be a recovery for the I:B ratio to ease, because inventories are an integral of billings flows. For inventories to decline, billings need to hold below OEM chip insertions into electronics. As those electronics are sold, inventory will be worked down. As that happens, billings will rise, lowering the ratio. As for when I see the inventory ratio returning to below 1.5, the three-month moving average (3MMA) was 0.7 above that level in January. 2020 is not a good comparison since inventories were low going into the pandemic. In 2008/9, it took 12 months to work down 0.5 points. So we’re probably looking at sometime in mid-2024, if it even returns to 1.5. Given that inventory controls failed in 2020/21, the move to JIC, and the evidence of a lack of response until late in 2023, the new critical level may be somewhere closer to 2.0. – Dan
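
The mid-2024 estimate is simple arithmetic, sketched below. Two assumptions are mine rather than stated in the answer: that the workdown is linear at the 2008/9 pace, and that “January” means January 2023, the year this note was written.

```python
import math

# From the text: the 3MMA ratio was 0.7 above the 1.5 level in January.
excess_points = 0.7
# From the text: in 2008/9 it took 12 months to work down 0.5 points.
points_per_month = 0.5 / 12

# Assumption: the workdown is linear and proceeds at the 2008/9 pace.
months_needed = math.ceil(excess_points / points_per_month)

# Assumption: "January" means January 2023.
start_year, start_month = 2023, 1
total = (start_month - 1) + months_needed
end_year = start_year + total // 12
end_month = total % 12 + 1

print(months_needed, end_year, end_month)  # prints: 17 2024 6
```

At that pace the ratio would need about 17 months, which lands in June 2024 and is consistent with the mid-2024 estimate. A slower response or a higher new critical level would change the answer, so treat this as a rough check rather than a forecast.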

Maxims: Risk vs Uncertainty: Know which is the basis behind any decision that needs to be made.

Misreading situations of risk versus uncertainty in decision-making can result in bad decisions because the best method under one can be the worst under another. It’s how the world’s financial system almost collapsed between 2007 and 2008. The reason everyone was caught off guard is that the banks relied on complex models that calculated risk levels from historical data barely covering a full cycle. This lulled them into complacency, while the uncertainties that could not be modeled went unaccounted for. These uncertainties make it impossible to probabilistically weigh all future outcomes [1]. It’s also why analysts in our industry often fail to forecast downturns, such as the one in 2019: in 2018, we were the only ones posting a negative sign for that year.

Risk-based decisions should be made only when most, and possibly all, outcomes are known. In other words, an answer can be arrived at because the problem is stochastic in nature and all the data is available. For example: hitting a light switch, investing in an exchange fund over a long period of time, flipping a coin, or gambling in a casino (ranked in descending order of the odds of a positive outcome). The probability of each can be arrived at statistically and reasonably accurate forecasts can be made.

Certainty-based decisions should be made when answers are deterministic and can be arrived at analytically with certainty. For example, you only need to check your bank balance with one bank. Here gathering the information statistically is costly and prone to significant errors. If you survey all the branches of your bank, the answer will be the same every time: the variance is zero and 100% of the observations match the mean, no matter how many branches you survey. If you instead took an average across N different banks, only one of which holds your account, your average balance would be (B + (N-1)*0)/N = B/N, where B is the actual balance at your bank, which is a bad error. Now, this may seem silly, but people often make the mistake of applying statistical answers to problems that masquerade as stochastic when a better answer can be arrived at analytically. Most commonly this is when the sample size is known, but incorrectly assumed to be infinite … the latter of which is what is typically taught in schools.
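
The bank example can be checked in a few lines. This is only an illustrative sketch: the balance, the number of branches, and the number of banks are hypothetical values I picked, not figures from the text.

```python
from statistics import mean, pvariance

actual_balance = 1200.0  # hypothetical balance at the one bank you use

# Surveying branches of your own bank: every reading is identical,
# so the variance is zero and each observation equals the mean.
n_branches = 5  # hypothetical
branch_readings = [actual_balance] * n_branches
branch_variance = pvariance(branch_readings)

# Averaging across N banks when only one holds your account:
# the other N-1 banks each report a balance of zero.
n_banks = 4  # hypothetical
bank_readings = [actual_balance] + [0.0] * (n_banks - 1)
naive_average = mean(bank_readings)  # equals actual_balance / n_banks

print(branch_variance, naive_average)
```

With these numbers the branch survey adds no information (variance of zero), while the cross-bank average understates the balance by a factor of four. The analytic answer, asking the one bank that holds the account, is both cheaper and exact.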

Uncertainty-based decisions should be made when all possible factors and outcomes can’t be known. Here simple heuristic rules, or maxims, outperform statistically based decisions, especially when dealing with small databases. For example, early predictions that the semiconductor equipment industry was no longer cyclical were based on a few years of stability that barely made up a full cycle. The confidence level was raised by stuffing databases with fractional years. When you look at the history of downturns in our industry [2], it is clear that despite predictions that a cycle will not happen because “this time it’s different,” cycles do in fact occur, even though their causes are different each time. Not only are they different, they are chaotic attractors. Hence, a simple rule is to assume they will happen, up or down, and to be prepared to take advantage of them opportunistically (good or bad).

Now, let’s look at some examples from the semiconductor industry:

Jim Morgan, the legendary leader of Applied Materials once told me he could never see more than six months out and yet he led the company to dominance over two decades. First, he made sure R&D was always developing products that met customer needs of value and he pruned the ones that did not when he first arrived. Then, he made sure he always had the cash to fund development and keep the company in the starting blocks through downturns. Jim was the first I ever heard use the term “Cash is King.” I don’t know if he invented it, but he certainly lived it. He always went for public offerings when times were good and tech stocks were high to have a full “War Chest” ready for any downturn. He also converted the profits from each successful system in the market to development dollars for the next. In contrast, many other semiconductor equipment companies that failed or became acquisition targets in cycles relied on debt funding — eventually learning the Swiss definition of a banker being someone who loans you an umbrella when it’s sunny and calls it back when it rains. Morgan went around uncertain times by being structurally sound enough to weather the storm while focusing on the sunny days that would inevitably come.

Craig Barrett is the executive whose leadership turned Intel’s manufacturing prowess around in the second half of the eighties. While he is often overlooked in the shadow of Andy Grove, Gordon Moore, and Bob Noyce, it was Craig who led them to become the largest semiconductor company in the nineties. Craig understood and believed in Moore’s dictum that “you can’t save your way through a downturn.” But under Craig’s watch, they faced the daunting need to grow when fab costs were growing ever higher. When faced with the problem of needing to plan capital investment against a history of wild utilization swings, Craig drew a straight line across the peaks in production and decreed they would invest strategically along this straight line rather than trying to respond tactically to fluctuations. This gave Intel’s management the advantage of having to make fewer short-term decisions, so they could focus on serving customers. It allowed them to place long-term orders for tools in downturns when prices were low and not have to scramble for capacity. It was also financially sound, using Andy Bryant’s financial maxim that it is always more profitable to have excess capacity than to be short of capacity.

Terry Higashi, the executive whose leadership turned Tokyo Electron from being a trade rep into the global semiconductor equipment giant it is today used his “Trusted Relationship Style” to guide the company to the top. He would win SEMI’s Sales and Marketing Excellence Award for this contribution to our industry. Terry’s sales maxim was introduced at a time when semiconductor company sales forces were viewed as being more akin to used car sellers than trusted partners. His focus on trust had the added effect of reducing uncertainty for his customers. Terry’s non-adversarial partnering style has become a mainstay of customer/vendor interaction due to the high cost, high effort, and high-risk nature of semiconductor technology.

Martin van den Brink’s “Share the pain” maxim came out of the 157nm lithography development disaster, which would have buried any equipment company that didn’t share it. The maxim was simple: get the customer to have skin in the game by having them share product development costs. This typically hadn’t been done before. As development costs soared on entering the nano-chip era, equipment companies began to fail with product development failures. Customers with no skin in their development efforts would chastise them as failures to hide their poor guidance from management. That fate could well have happened to ASML had they not gotten customers to fund 157nm development. It had the added benefit of getting the industry to act as a single entity to cleanly shut 157nm efforts down and shift all its resources to immersion. “Share the pain” was also critical to ASML’s success in immersion and its ability to become the only company to succeed in EUV. Martin also won SEMI’s Sales and Marketing Excellence Award for his contribution to our industry.

As you can see, all have simple heuristic rules to deal with uncertainty that the entire organization could easily follow. Importantly, all these rules have little to do with technology even though these companies are all technology leaders. The important lesson is that simple rules for dealing with uncertainty save the time that would otherwise be spent applying complex models, which are inherently inaccurate under uncertainty, and allow that time to be shifted to providing solutions for customers.

1. A.G. Haldane, The Dog and the Frisbee, Federal Reserve Bank of Kansas City’s 36th economic policy symposium, Jackson Hole, 2012.

2. G. D. Hutcheson, A Cyclical History of the Semiconductor Equipment Industry, The Chip Insider, 22 February 2019.

Risk is when an outcome’s probability is known. Uncertainty is when an outcome’s probability is unknown. Know which is which before you weigh your decisions.

Timing is one of the most difficult problems of decision-making. Wait too long to get all the facts together and opportunity is lost, as it escapes to those who beat you to the punch. Move too early and what seemed to be an opportunity may be a mirage. The finer your ability to resolve risk from uncertainty and the better your ability to grade the facts at hand, the better will be your ability to time your decisions. Andy Grove once said he never regretted making a decision; he only regretted not making them earlier. That is the paradox of decision-making. No matter how good you get, once you know a decision must be made – it always could have been made earlier. But you couldn’t have known to make it without the information. This is why Andy is such a legend in decision-making: his timing was as good as it gets.

The first principle is to weigh the facts at hand: what are facts, what are probabilities, and what are faiths (remember you can’t have faith if you know). Facts have no assigned probability. Information that you can assign a probability is a step down in quality. For example, today’s weather is a fact. As for tomorrow’s, there may or may not be an assignable probability. But once tomorrow is over, it will be a fact. An investment is when you know the odds favor you. A gamble is when the odds don’t favor you. Information is actionable. Data is not. Once information becomes known, it ceases to be information and becomes data. For example, if you knew which stocks would rise tomorrow . . . that would be information, as you can act by buying these stocks. But once tomorrow passes, this information will be known by everyone – making it data to be used in search of more information. Uncertainty is where no probability can be assigned. This is where faith comes into the decision-making process. Faith is based on beliefs that come from either experience or are passed down from others you respect.

For example, we can assign a specific probability to flipping a coin. We cannot assign a probability to the continuance of Moore’s Law or Murphy’s Law as there are parts to the underlying mechanism for their continuance that we cannot comprehend with certainty.

Let’s look at Intel and how it has institutionalized its decision-making process as an example of a great success formula. They have faith in both Moore’s and Murphy’s Laws. Murphy’s Law drives how they approach manufacturing. They never know where Murphy will strike, but by mistake-proofing their operations, they systematically eliminate the potential for Murphy to raise his head. As for Moore’s Law, the odds are high it will continue because it has always continued. The known facts, first documented in Gordon’s paper, are that it is made possible by shrinking critical dimensions. Faith comes in where one must believe in the continuing ingenuity of the engineers and scientists in our industry to make this possible and then the ingenuity of the designers to use the added transistors in a way that will appeal to those who buy ICs and ultimately, electronics. It is a general rule that faith turns to information and information turns to data. Seldom do things flow the other way. Intel applied this rigorously. So when they do have surprises, they are able to quickly align an organization of thousands around a systematic vision based on their deep understanding of decision-making.

Morris Chang’s “Never compete with your customer” is another great example of faith-based decision-making. Morris saw fundamental problems as he experienced what happens when an IDM offers what we now call “foundry services” at previous employers such as TI and UMC. He believed the goals of both were ultimately conflicted for many reasons. Hence, true partnering with strong trust bonds was far harder to achieve. He built TSMC on the faith it could be successful if the brand meant it would never compete with its customers by selling chips. It would only sell foundry services. The trust built enabled fabless giants like Qualcomm, Avago, Xilinx, and Nvidia to emerge. They could go all in because they didn’t have to worry about TSMC stealing their designs or shifting fab capacity away to build its own products when an upturn came. Then came Apple. No fabless giants emerged before Morris’s foundry model was conceived. They only came after, all working with TSMC.

For more, search the ‘Maxims of Tech’ category in the learn section of the Chip History Center: https://www.chiphistory.org/learn-list

Maxim: “an expression of a general principle.” – Webster’s Dictionary

Maxims: Rules of Engagement for a Fast-Changing Environment or how to thrive in what is the extreme sport of business.
