Ceva Tackles Unstructured Sparsity

February 1, 2022 - Author: Aakash Jani

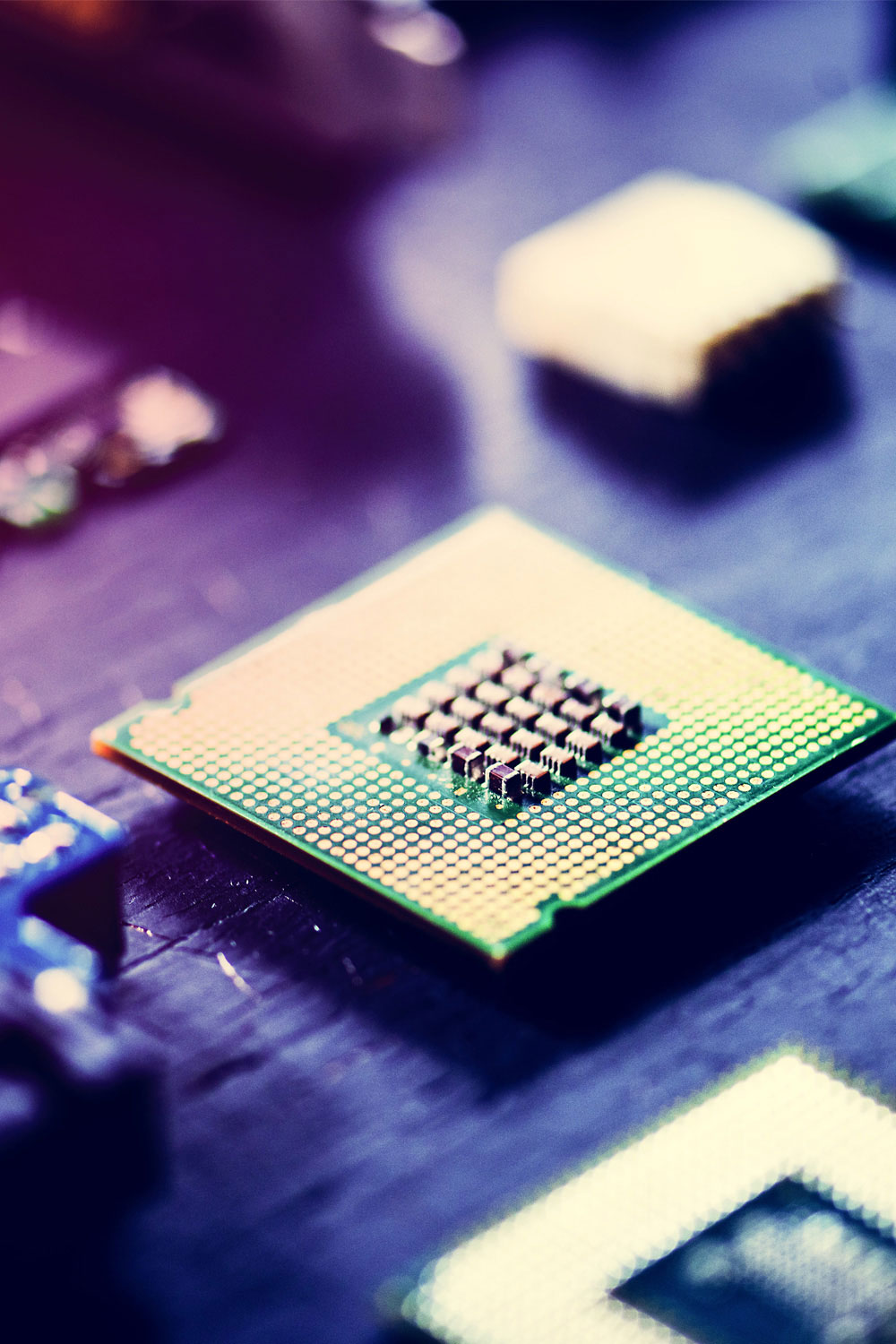

Ceva’s NeuPro-M deep-learning accelerator (DLA) offers 16x faster inferencing than the previous generation thanks to its new architecture. The licensable core showcases Winograd-convolution and unstructured-sparsity engines, which boost performance and reduce power consumption. These accelerators also keep multiply-accumulate (MAC) units busy, increasing utilization.

Each NeuPro-M engine has a 4,096-unit MAC array that can achieve 10 trillion INT8 operations per second (TOPS). The new sparsity capability can greatly improve utilization of this array, delivering higher performance than competing DLAs with similar TOPS rates. The intellectual-property (IP) vendor offers scalable configurations for its new DLA: the NPM11 employs a single NeuPro-M engine, and the NPM18 has eight. Ceva intends to scale its NeuPro-M family to support up to eight NPM18 instantiations (64 engines). It’s already shipping RTL to lead customers and expects general production release in March.
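As a rough sanity check on these figures, peak throughput for a MAC array is commonly estimated as MAC units × 2 operations (one multiply, one add) × clock frequency. The sketch below assumes that convention and a hypothetical 1.25GHz clock, which the article does not state; that value was picked only because it lands near the quoted 10 TOPS. The multi-engine numbers assume ideal linear scaling and are illustrative, not Ceva's published ratings.

```python
# Back-of-the-envelope peak-throughput estimate for a MAC array.
MACS_PER_ENGINE = 4096      # from the article
OPS_PER_MAC = 2             # common convention: multiply + accumulate
ASSUMED_CLOCK_HZ = 1.25e9   # ASSUMPTION: not stated in the article


def peak_tops(engines: int, clock_hz: float = ASSUMED_CLOCK_HZ) -> float:
    """Return the ideal peak INT8 TOPS for a given number of engines."""
    return engines * MACS_PER_ENGINE * OPS_PER_MAC * clock_hz / 1e12


# Engine counts come from the article; linear scaling is an assumption.
configs = [("NPM11", 1), ("NPM18", 8), ("8 x NPM18", 64)]

for name, engines in configs:
    print(f"{name}: {engines} engine(s), ~{peak_tops(engines):.2f} peak INT8 TOPS")
```

Real designs rarely sustain this peak; the figure is an upper bound that utilization then scales down.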

NeuPro-M targets high-end computer vision, such as in smartphones, AR/VR headsets, and multicamera surveillance systems. The new IP also vies for Level 2 and 3 ADAS designs. The DLA features a secure enclave for firmware updates and key authentication, and it has earned ASIL B and ASIL D certifications.

The DLA IP market remains highly competitive, with new products arriving monthly from established competitors such as Cadence and Synopsys as well as startups such as EdgeCortix and Expedera. In this crowded field, vendors must differentiate through software, power consumption, and inference latency.

NeuPro-M replaces and builds on Ceva’s older NeuPro-S. For models with 50% sparsity, it doubles the peak performance of its predecessor. In NeuPro-S, Ceva kept the DSP (vector processing unit, or VPU) separate from the systolic array; in NeuPro-M, it integrates the VPU with other preprocessors and accelerators. Both DLAs employ the same software, however, easing the task of porting applications.
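To illustrate why 50% sparsity can translate into roughly twice the effective throughput, here is a minimal sketch of a dot product that issues a MAC only for nonzero weights. It is not Ceva's hardware design, which the article does not detail; the function names and the 1,024-element vector are hypothetical. Zeros are placed at arbitrary positions to mimic unstructured sparsity.

```python
import random


def dense_dot(weights, activations):
    """Multiply-accumulate every pair; return (result, MACs issued)."""
    acc, macs = 0, 0
    for w, a in zip(weights, activations):
        acc += w * a
        macs += 1
    return acc, macs


def sparse_dot(weights, activations):
    """Skip zero weights; return (result, MACs issued)."""
    acc, macs = 0, 0
    for w, a in zip(weights, activations):
        if w != 0:  # a zero weight contributes nothing, so skip the MAC
            acc += w * a
            macs += 1
    return acc, macs


random.seed(0)
n = 1024  # hypothetical vector length

# Start with nonzero INT8 weights (a drawn 0 is replaced by 1).
weights = [random.randint(-128, 127) or 1 for _ in range(n)]

# Zero exactly half of them at random positions (unstructured, 50% sparsity).
for i in random.sample(range(n), n // 2):
    weights[i] = 0

activations = [random.randint(-128, 127) for _ in range(n)]

dense_result, dense_macs = dense_dot(weights, activations)
sparse_result, sparse_macs = sparse_dot(weights, activations)

print(f"results match: {dense_result == sparse_result}")
print(f"MACs issued: dense={dense_macs}, sparse={sparse_macs}")
print(f"ideal speedup: {dense_macs / sparse_macs:.1f}x")
```

The halved MAC count is an idealized bound. In hardware, the cost of locating nonzero weights and keeping the array evenly loaded determines how much of that gain is realized.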

Subscribers can view the full article in the Microprocessor Report.